More than a century ago, if the acronym CEO had existed, it might well have stood for Chief Electricity Officer. Electricity had by then become an essential and costly ingredient of manufacturing. However, because it was generated as direct current, it could not be transmitted far before its energy dissipated in the metal wires. Back in the 1800s, individual businesses therefore built their own power generators: sitting next to each company, whether a steel mill or a factory, was its own power plant (Figure 1). Only after Nikola Tesla developed practical alternating-current (AC) systems at the end of the 1800s did long-distance electricity transmission become feasible, gradually leading to the modern electrical grid. Today nobody worries about electricity generation; when we buy a new electronic appliance, we simply plug and play.

As far as computers are concerned, the term "cloud" dates back to early network design, when engineers would map out all the various components of their own networks but only loosely sketch the unknown networks (like the Internet) that theirs was hooked into. What does a rough blob of undefined nodes look like? A cloud (Figure 2). Imagine I am giving this Cloud Computing presentation in a company's conference room, with PowerPoint slides opened from a file hosted on a server in the company's data center. For the purpose of understanding how the file is transferred to the presenting computer and how the video signal is sent to the overhead projector, the exact server and network architectures within the data center are irrelevant; therefore, we can simply represent the data center as a Cloud symbol.

High Availability & Fault Tolerance

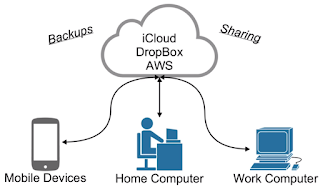

By using network resources instead of a local device, our data is available on multiple devices and remains available even when some device or cloud component goes down, thanks to built-in redundancy (Figure 3). However, these are necessary conditions for something to be a Cloud, not sufficient ones: we already gain both benefits from our company's own data center, yet that data center is not a Cloud. What, then, are the unique characteristics of the Cloud?


Figure 3. By storing data remotely in the Cloud, we gain high availability and fault tolerance. (credit: https://www.youtube.com/watch?v=LKStwibxbR0&list=PLv2a_5pNAko2Jl4Ks7V428ttvy-Fj4NKU&index=1)

Scalability

In this big data era, we are all facing an explosion of data; only the extent varies. IT departments in all companies are busy managing constantly growing demands for larger storage capacity, more powerful databases, and, in turn, more computing power.

Imagine we are a small startup company that had 3 servers supporting 1,000 users in 2016. In 2017, our user base has grown to 5,000 and we have doubled our servers to 6. Our projection for 2018 is 20,000 users, so we propose a budget to buy 9 more servers. We need to write a convincing proposal, get capital approval, obtain quotes from multiple vendors and go through the procurement process, find or build additional rack space in our data center, wire the hardware, and install and configure the software ecosystem on these servers. Even at the most efficient companies, this process can easily take months, on top of the large lump-sum capital investment. Rapid scalability is what a company's own data center cannot provide. In terms of the electricity analogy, this headache exists because we are running our own power generator.


Figure 4. Scaling up on-premise is costly in both money and time. (credit: https://www.youtube.com/watch?v=LKStwibxbR0&list=PLv2a_5pNAko2Jl4Ks7V428ttvy-Fj4NKU&index=1)

What if we could go to a Cloud provider instead? With a few clicks, we specify the CPU, memory, and storage requirements for our nine servers, as well as the operating system we want. Within minutes, the servers are up and running, and better yet, we pay less than one dollar per hour for each of them, with no capital budget required. That is what Cloud Computing promises, and it fundamentally changes the way we think about and use computing resources, moving us toward the plug-and-play utility model.
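The "few clicks" can also be scripted. Below is a minimal sketch using the boto3 SDK's EC2 client (`run_instances`), assuming boto3 is installed and AWS credentials are configured. The AMI ID, the `t3.large` instance type (2 vCPUs, 8 GiB of memory), the root device name `/dev/xvda`, and the 100 GiB disk are hypothetical placeholders: in EC2, the instance type fixes CPU and memory, and the AMI fixes the operating system.

```python
# A minimal sketch, assuming boto3 and configured AWS credentials.
# The AMI ID, instance type, device name, and disk size are hypothetical.

def build_launch_request(count, instance_type, ami_id, disk_gib, subnet_id=None):
    """Return keyword arguments for the EC2 run_instances call."""
    request = {
        "ImageId": ami_id,              # the AMI determines the operating system
        "InstanceType": instance_type,  # the instance type determines CPU and memory
        "MinCount": count,              # MinCount == MaxCount: all `count` servers
        "MaxCount": count,              # are launched, or none at all
        "BlockDeviceMappings": [{
            # The root device name depends on the AMI; /dev/xvda is an assumption.
            "DeviceName": "/dev/xvda",
            "Ebs": {"VolumeSize": disk_gib, "VolumeType": "gp3"},
        }],
    }
    if subnet_id is not None:
        request["SubnetId"] = subnet_id  # place the servers in one of our subnets
    return request


def launch(request, region="us-west-2"):
    """Start the instances and return their IDs (this incurs charges)."""
    import boto3  # imported lazily so the request builder works without boto3
    ec2 = boto3.client("ec2", region_name=region)
    response = ec2.run_instances(**request)
    return [instance["InstanceId"] for instance in response["Instances"]]


req = build_launch_request(9, "t3.large", "ami-0123456789abcdef0", 100)
# launch(req)  # uncomment to actually start (and pay for) nine servers
```

Setting `MinCount` and `MaxCount` to the same value asks EC2 for all nine servers or none; the launch call is left commented out because it starts billable instances.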

Elasticity

Growth is always hard to predict accurately. In the previous example, what if our user base grows not to 20,000 but only to 7,000 next year? Eight of the nine new servers would be wasted, which is especially undesirable because seed funding for our little startup is limited. Even if our prediction were accurate, the nine servers would only gradually come into use as we approach the end of 2018. And once bought, on-premise computing infrastructure cannot be sized down. Since the scalability limitation described above is usually addressed by overshooting resource needs, waste is hard to avoid.
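To put rough numbers on the overshoot, here is a small sketch comparing the server-months we would own on-premise with those an on-demand fleet would need. It assumes, hypothetically, that one server handles 1,000 users and that our user base grows linearly from 5,000 to 7,000 over 2018; neither figure comes from a real workload.

```python
import math

USERS_PER_SERVER = 1_000   # hypothetical capacity of one server
ON_PREM_SERVERS = 6 + 9    # the 2017 fleet plus the nine servers bought for 2018


def servers_needed(users, users_per_server=USERS_PER_SERVER):
    """Smallest number of servers that can handle `users`."""
    return math.ceil(users / users_per_server)


def monthly_users(start, end, months=12):
    """Hypothetical linear growth from `start` to `end` users over the year."""
    return [start + (end - start) * m // (months - 1) for m in range(months)]


def server_months(demand):
    """Return (on-premise, on-demand) server-months paid for over the year."""
    on_prem = ON_PREM_SERVERS * len(demand)                 # fixed fleet all year
    on_demand = sum(servers_needed(u) for u in demand)      # fleet follows demand
    return on_prem, on_demand


demand = monthly_users(5_000, 7_000)   # growth fell short: 7,000, not 20,000
on_prem, on_demand = server_months(demand)
print(on_prem, on_demand, on_prem - on_demand)  # 180 77 103
```

Under these assumptions, only 77 of the 180 server-months we paid for are actually needed, and even in December 8 of the 9 new servers are still idle.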


Figure 4. Scaling down on-premise is costly because of wasted resources. (credit: https://www.youtube.com/watch?v=LKStwibxbR0&list=PLv2a_5pNAko2Jl4Ks7V428ttvy-Fj4NKU&index=1)

With a Cloud provider, the overhead of getting a new server is so light that our server farm scales up only when we really need it to, which largely removes the need to size down.

Let us take another example to illustrate the importance of elasticity. Retailers such as Walmart earn a disproportionate share of their revenue in the last two months of the year. If a retailer's in-house IT department purchases a battery of servers to accommodate the computing needs of busy November and December, those resources will sit idle from January until the next shopping season. With a Cloud provider, the retailer can shut down and return any extra computing resources in January and immediately stop paying for them.
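A toy calculation makes the seasonal argument concrete. The demand numbers below are hypothetical (100 servers from January through October, 400 for the holiday months), and the sketch deliberately ignores that renting a server for a month may cost more than owning one.

```python
# Hypothetical monthly server demand for a retailer (January..December).
BASELINE, PEAK = 100, 400
demand = [BASELINE] * 10 + [PEAK] * 2   # November and December are busy


def provisioned_for_peak(demand):
    """In-house: buy enough servers for the busiest month and keep them all year."""
    return max(demand) * len(demand)


def pay_per_use(demand):
    """Cloud: rent exactly what each month needs and return the rest."""
    return sum(demand)


owned = provisioned_for_peak(demand)
rented = pay_per_use(demand)
idle_fraction = 1 - rented / owned       # share of owned capacity that sits idle
print(owned, rented, idle_fraction)      # 4800 1800 0.625
```

Provisioning for the peak buys 4,800 server-months, while paying per use covers the same demand with 1,800, so under these assumptions 62.5% of the owned capacity would sit idle over the year.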

The Cloud provider can absorb the risks of scalability and elasticity through economies of scale. Just as power plants join together in a giant electrical grid to balance power consumption, a Cloud provider leverages the fact that its tenants' needs fluctuate at different times, which puts it in a much better position to provide or take back resources on demand.
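The provider's advantage is often called statistical multiplexing: the peak of the combined demand is never larger than the sum of the individual peaks, and it is much smaller when tenants are busy at different times. The three tenants and their monthly demand below are hypothetical.

```python
# Hypothetical monthly server demand (January..December) for three tenants
# whose busy seasons fall in different months.
tenants = {
    "retailer":   [100] * 10 + [400] * 2,            # Nov-Dec shopping peak
    "tax_filing": [50, 300, 300, 300] + [50] * 8,    # Feb-Apr filing season
    "travel":     [60] * 5 + [250] * 3 + [60] * 4,   # Jun-Aug vacation peak
}


def sum_of_peaks(tenants):
    """Servers needed if every tenant sizes its own data center for its own peak."""
    return sum(max(demand) for demand in tenants.values())


def peak_of_sum(tenants):
    """Servers a shared provider needs to cover every tenant in every month."""
    monthly_totals = [sum(month) for month in zip(*tenants.values())]
    return max(monthly_totals)


print(sum_of_peaks(tenants), peak_of_sum(tenants))  # 950 510
```

Three separate data centers would need 950 servers in total, while a shared provider serving the same tenants needs only 510 in its busiest month.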

What Is the Cloud?

Therefore, the Cloud can be viewed as a virtually infinite pool of storage and computing resources that we can size up and down as we wish, paying only for what we actually use, just like our plug-and-play model of electricity usage. The Cloud improves efficiency by eliminating the operational overhead of scaling up, and it significantly reduces cost by removing the up-front investment and avoiding size-down waste.

However, Cloud Computing is not entirely plug-and-play yet. The electrical grid has been polished over the past century to offer a small set of standard parameters: voltage and frequency. Computing resources are much harder to define, because they rely on a software ecosystem for which we do not yet have a standardized platform. Linux, Windows, OSX, iOS, and Android each have their own advantages for specific applications, and there will be no one-size-fits-all platform in the near future. In addition, Cloud Computing is delivered remotely over the Internet, so network latency is significant, not so much because of the speed of light but because our data packets are relayed through many routers sitting between us and the Cloud provider. And when Cloud computing must be fueled by our own data (which is not the case for electricity), it probably does not make sense to transfer terabytes of data into the Cloud just for a short computation.
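The cost of shipping data is easy to estimate with back-of-the-envelope arithmetic: transfer time is the data size in bits divided by the link bandwidth. The sketch below assumes decimal units (1 TB = 10^12 bytes) and a hypothetical dedicated link, and it ignores latency; the optional `efficiency` factor is a crude stand-in for protocol overhead.

```python
TB = 10**12     # decimal terabyte, in bytes
MBPS = 10**6    # one megabit per second, in bits per second


def transfer_hours(data_bytes, link_bps, efficiency=1.0):
    """Hours needed to push `data_bytes` through a link of `link_bps` bits/s.

    `efficiency` (0 < efficiency <= 1) is a crude allowance for protocol
    overhead; latency is ignored entirely.
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    seconds = data_bytes * 8 / (link_bps * efficiency)
    return seconds / 3600


for link in (100 * MBPS, 1_000 * MBPS):
    print(f"1 TB over {link // MBPS} Mbit/s: {transfer_hours(TB, link):.1f} h")
# 1 TB over 100 Mbit/s: 22.2 h
# 1 TB over 1000 Mbit/s: 2.2 h
```

At a steady 100 Mbit/s, a single terabyte takes roughly 22 hours to upload, which can easily dwarf a computation that runs for minutes.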

Last but not least is the pace of innovation. Innovations in electricity generation and distribution happen on the scale of decades or centuries; in contrast, Moore's Law is measured in months. Because the Cloud is largely a technology rather than a science, the approach that survives is not necessarily the most correct one; usually the most popular one eventually becomes the standard. The Cloud is at a nascent stage, with many new technologies coming and going, so constant learning is key. So which Cloud platform is winning at this point? Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform are the three largest Cloud providers. AWS currently leads the market, especially in providing raw computing resources (IaaS, Infrastructure as a Service), so we will briefly explain the main concepts of AWS.

Amazon Web Services (AWS)

AWS has data centers in many geographic locations, grouped into "regions" (Figure 5); e.g., there is a region in Northern California, one in Oregon, another in Virginia, etc. Each region is a circle on the world map. To use AWS, a company first chooses a region in which to establish a virtual data center, called a Virtual Private Cloud (VPC). Within a region, say Oregon, there are multiple isolated locations called Availability Zones (AZs); Oregon, for instance, includes AZs suffixed a, b, and c. Each AZ consists of one or more data centers holding racks and racks of computers, and AWS allocates these computers to, and reclaims them from, different tenants based on their demands. Our VPC is a logical concept: when it is first created, it contains no computers at all. Only when we request one does AWS take a free instance and virtually place it into our VPC (in effect, simply labeling it as ours in its resource-management database).

To use a VPC, we first create a few data structures called subnets, which pre-allocate our IP address space to avoid future conflicts. Each subnet must reside in a single AZ (it cannot span AZs). Although Figure 5 draws an orange box for each subnet, a subnet actually consists of computers that AWS allocates on demand, scattered around within that AZ. This is the Cloud, so we do not care where our computers physically are or how they are networked; it is sufficient to imagine one virtual data center consisting of a few sub-networks, each with its own routing and network security settings. E.g., one subnet for database servers that need to talk to nothing except the web servers, and another subnet hosting web servers that can talk to the database subnet and also to the Internet to process HTTP requests.
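The per-subnet security settings described above can be modeled with Python's standard `ipaddress` module. This is a hypothetical sketch loosely in the spirit of AWS security groups and network ACLs, not their actual semantics: the subnet names, CIDR blocks, and the PostgreSQL port 5432 are illustrative assumptions, and anything not explicitly allowed is denied.

```python
import ipaddress

# Hypothetical subnets inside one VPC.
SUBNETS = {
    "web": ipaddress.ip_network("10.0.0.0/24"),
    "db": ipaddress.ip_network("10.0.1.0/24"),
}
ANYWHERE = ipaddress.ip_network("0.0.0.0/0")

# Hypothetical inbound rules: (destination subnet, allowed source, port).
INBOUND_RULES = [
    ("web", ANYWHERE, 80),          # HTTP from the Internet
    ("web", ANYWHERE, 443),         # HTTPS from the Internet
    ("db", SUBNETS["web"], 5432),   # database traffic from the web tier only
]


def subnet_of(ip):
    """Name of the subnet containing `ip`, or None if it is outside the VPC."""
    addr = ipaddress.ip_address(ip)
    for name, net in SUBNETS.items():
        if addr in net:
            return name
    return None


def is_allowed(src_ip, dst_ip, port):
    """True if some rule lets src_ip reach dst_ip on `port`; default deny."""
    dst = subnet_of(dst_ip)
    src = ipaddress.ip_address(src_ip)
    return any(
        dst == rule_dst and src in rule_src and port == rule_port
        for rule_dst, rule_src, rule_port in INBOUND_RULES
    )


print(is_allowed("203.0.113.7", "10.0.0.10", 443))   # True: Internet -> web
print(is_allowed("203.0.113.7", "10.0.1.20", 5432))  # False: Internet -> db
print(is_allowed("10.0.0.10", "10.0.1.20", 5432))    # True: web -> db
```

A request from the Internet reaches the web tier on port 443, while the same client aiming directly at the database subnet is rejected.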

In Figure 5, we create a VPC in Oregon and pre-allocate four subnets, each with a unique IP range of 256 addresses: 10.0.0.0/24, 10.0.1.0/24, 10.0.2.0/24, and 10.0.3.0/24. Only 251 addresses per subnet can be assigned to computers, because AWS reserves five addresses in every subnet. Logically, we can imagine that all the computers we launch within our VPC sit on one giant local area network (LAN) and can talk to each other as routing permits.
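The address arithmetic can be checked with the standard `ipaddress` module. The sketch assumes a hypothetical VPC CIDR block of 10.0.0.0/16 (the post does not give one), carves out the four /24 subnets, and assigns them round-robin to AZs named us-west-2a through us-west-2c purely for illustration; the five reserved addresses per subnet follow AWS's documented rule.

```python
import ipaddress
from itertools import islice

# AWS reserves the first four addresses and the last address of every subnet.
AWS_RESERVED_PER_SUBNET = 5

vpc = ipaddress.ip_network("10.0.0.0/16")             # hypothetical VPC range
subnets = list(islice(vpc.subnets(new_prefix=24), 4))  # first four /24 blocks

# Hypothetical placement: each subnet lives in exactly one AZ.
azs = ["us-west-2a", "us-west-2b", "us-west-2c"]
plan = {str(net): azs[i % len(azs)] for i, net in enumerate(subnets)}


def usable_hosts(net):
    """Addresses in `net` that can be assigned to instances."""
    return net.num_addresses - AWS_RESERVED_PER_SUBNET


for net in subnets:
    print(net, plan[str(net)], usable_hosts(net))
# 10.0.0.0/24 us-west-2a 251
# 10.0.1.0/24 us-west-2b 251
# 10.0.2.0/24 us-west-2c 251
# 10.0.3.0/24 us-west-2a 251
```

Because the subnets are carved from one parent block, they cannot overlap, which is exactly the kind of conflict that pre-allocating the address space is meant to prevent.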

For data scientists, using AWS does put the burden on us to learn some basic skills for which we used to rely on our UNIX administrators. Cloud Computing is most effective in a self-service model; if we instead rely on a ticketing system handled by a Cloud administrator, we will again wait hours or days for a resource we could have obtained within minutes. Cloud Computing is here to stay and is clearly the future, so it is worthwhile for data scientists to learn the basics of AWS.

Some basic tasks include how to spin up a computer, called an EC2 instance, how to install the packages we need with "sudo" (say, Python 3), and how to install modules (say, with Python's "pip"). But do not be intimidated: when compiling or installing a software package, we have the freedom to specify the hardware and to choose the operating system and the release we need, a luxury that in-house UNIX administrators can only dream of. Furthermore, we can take advantage of pre-packaged software containers (such as Docker containers), or even use a whole Amazon Machine Image (AMI) to provision a complete machine ecosystem. This is not an AWS usage tutorial; AWS is best learned through hands-on lab sessions, which are beyond the scope of this short blog post.


| Figure 5. The high-level architecture of a company's virtual data center in AWS. |

We have explained here what Cloud Computing is, and I hope you can now appreciate that the Cloud is not simply a buzzword but a data engineering revolution, just like going from running our own power generator to relying on the electrical grid.

References

The information in this post is compiled from the following resources:

- Video: AWS Concepts: Understanding the Course Material & Features, https://www.youtube.com/watch?v=LKStwibxbR0&list=PLv2a_5pNAko2Jl4Ks7V428ttvy-Fj4NKU&index=1

- https://cacm.acm.org/magazines/2010/5/87274-cloud-computing-and-electricity-beyond-the-utility-model/fulltext

- https://www.theatlantic.com/technology/archive/2011/09/clouds-the-most-useful-metaphor-of-all-time/245851/

- https://www.quora.com/How-is-cloud-computing-analogous-to-electricity-grid